One in seven USB drives with company data are lost or stolen, according to a survey recently conducted by Google’s Security, Privacy and Abuse Research team. A seemingly straightforward mitigation would be to mandate that all USB drives with corporate data use hardware-based encryption.

However, this still means that one in seven of those drives are going to get lost or stolen, so administrators have to implicitly place a lot of trust in that hardware. We also place trust in other secure hardware devices, like OTP tokens (e.g., RSA tokens) and Universal 2nd Factor (U2F) tokens (e.g., Yubico’s YubiKeys).

Due to the difficulty of verifying a device’s security properties, purchasing decisions often have to be made based on secondary factors, like vendor reputation. This creates an environment where software-oriented people just assume hardware is secure because life becomes much more difficult if that assumption is questioned. Unfortunately, the reader’s life is about to become much more difficult.

Auditing USB Key Hardware Components

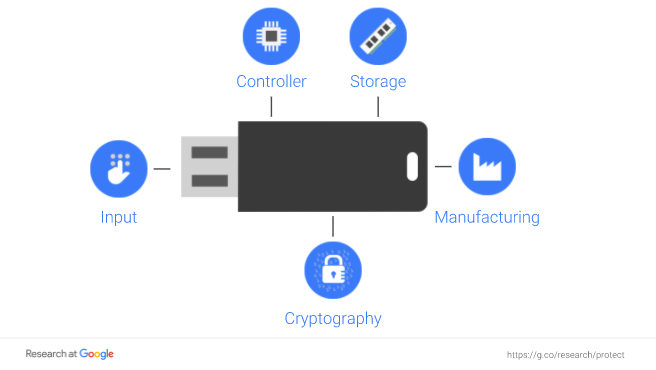

Elie Bursztein (@elie), Jean-Michel Picod (@jmichel_p), and Rémi Audebert (@halfr) recently presented “Attacking Encrypted USB Keys the Hard(Ware) Way” at Black Hat 2017, where they took on the challenging task of auditing the hardware components of encrypted USB keys. They established five possible types of weaknesses and demonstrated exploits of each type. This structure made the talk stand out, as it was accessible even to those who weren’t familiar with hardware security.

The number of times the word “FAIL” appeared in their slides is certainly indicative of the state of cryptographic hardware implementations. The most impactful takeaway is that they found that different models from a common manufacturer might have substantially different security postures—one might be suitable to protect against all but state-level actors, while another might be built such that even an opportunistic hacker can extract your data.

Vendor reputation is, apparently, not a reliable proxy for security properties, which means there’s even less information for buyers to use during the purchasing decision. Instead, the speakers concluded that NIST standards provide useful information (failing to conform to them is likely a negative sign), but they aren’t sufficient to actually make claims about the security of a device.

This is left as an open question: we need better standards, so does anybody want to make them (and, more onerously, get everyone to agree on them)?

Counterfeiting Security Tokens

While the Google team pulled apart USB drives to audit them, Joe FitzPatrick (@securelyfitz) and Michael Leibowitz (@r00tkillah) detailed their efforts at producing counterfeit security tokens at DEF CON 25, in an entertaining talk titled, “Secure Tokin' and Doobiekeys: How to Roll Your Own Counterfeit Hardware Security Devices”.

The first victim was an OTP token, similar to an RSA token. They decided to completely ignore the secure hardware that stored the cryptographic seed, since that’s intended to be hard to break into. In the world of copyright law, there’s a concept known as the “analog loophole”: you can have all the DRM in the world, but at some point, you have to output an analog signal, i.e., sound or light waves, and those can be captured.

This team took a similar approach, and intercepted the signal going to the seven-segment LCD display. By waiting for all the segments to light up as different OTP codes are displayed, they were able to deduce that a given signal on a given pin meant that a particular segment was going to turn on or off.
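As a rough illustration of what a receiver could do with that information, the sketch below turns sampled pin levels back into digits. The pin-to-segment assignment (`PIN_TO_SEGMENT`) and the function names are hypothetical and not taken from the talk; only the a-g segment patterns for each digit follow the standard seven-segment convention. A real LCD is typically driven with multiplexed waveforms, so the step from raw signal to a clean on/off level is assumed to have happened already.

```python
# Hypothetical mapping from probed pin number to segment label (a-g).
# In practice this is what would be deduced by watching codes change.
PIN_TO_SEGMENT = {0: "a", 1: "b", 2: "c", 3: "d", 4: "e", 5: "f", 6: "g"}

# Standard seven-segment patterns: which segments are lit for each digit.
SEGMENTS_TO_DIGIT = {
    frozenset("abcdef"): "0",
    frozenset("bc"): "1",
    frozenset("abdeg"): "2",
    frozenset("abcdg"): "3",
    frozenset("bcfg"): "4",
    frozenset("acdfg"): "5",
    frozenset("acdefg"): "6",
    frozenset("abc"): "7",
    frozenset("abcdefg"): "8",
    frozenset("abcdfg"): "9",
}


def segments_from_pins(pin_levels):
    """Convert seven on/off pin levels (index = pin number) to lit segments."""
    return frozenset(
        PIN_TO_SEGMENT[pin] for pin, level in enumerate(pin_levels) if level
    )


def decode_display(frames):
    """Decode one frame of pin levels per digit position into a string.

    Unrecognised patterns are rendered as '?' rather than guessed.
    """
    return "".join(
        SEGMENTS_TO_DIGIT.get(segments_from_pins(frame), "?") for frame in frames
    )
```

With this assumed pin mapping, `decode_display([[0, 1, 1, 0, 0, 1, 1], [1, 1, 0, 1, 1, 0, 1]])` returns `"42"`.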

By packing this detection logic and a Bluetooth transmitter into the token’s housing, they could broadcast the state of the display without ever having to semantically understand what the current OTP code was. The threat model requires an attacker to have physical access to the victim’s token for at least several minutes, which, if you’re taking good care of your tokens, would probably only happen when you’re asleep.

A successful attack would allow malicious attackers within Bluetooth range (at most, 100 meters if you have line-of-sight view of the token) to know your OTP codes as they were generated. Then, all they would have to do is phish your login credentials to gain persistent access to the account the token protects.

Their second hack was producing a counterfeit YubiKey U2F token. The appeal of this attack is that the “identity” of the key is burned in when it ships, and the security relies on that staying secret. If a malicious actor is able to retain the secret information that they burn into a fake YubiKey, and then convince a user that it’s a legitimate YubiKey, they can later impersonate that token.
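To see why a retained secret is enough, consider a minimal challenge-response sketch. This is not the U2F protocol: real U2F tokens sign challenges with ECDSA key pairs, and here HMAC-SHA256 from the Python standard library stands in for the signature purely so the example is self-contained. The function names are made up for illustration.

```python
import hashlib
import hmac
import os


def sign_challenge(device_secret: bytes, challenge: bytes) -> bytes:
    """Stand-in for the token's signing step (HMAC here, ECDSA in real U2F)."""
    return hmac.new(device_secret, challenge, hashlib.sha256).digest()


def responses_match(secret_in_token: bytes, secret_kept_by_maker: bytes) -> bool:
    """Return True if both parties answer a fresh challenge identically."""
    challenge = os.urandom(32)  # a fresh random challenge from the service
    token_response = sign_challenge(secret_in_token, challenge)
    maker_response = sign_challenge(secret_kept_by_maker, challenge)
    return hmac.compare_digest(token_response, maker_response)


def demo() -> bool:
    burned_in = os.urandom(32)   # secret written into the fake token
    retained = bytes(burned_in)  # copy the counterfeiter keeps
    return responses_match(burned_in, retained)
```

`demo()` returns `True`: whoever holds a copy of the burned-in secret produces responses that are indistinguishable from the token’s own, without needing the token at all.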

FitzPatrick and Leibowitz showed their incremental attempts at shrinking their PCB and components to fit into a YubiKey form factor. They were able to 3D print a YubiKey replica that could hold their counterfeit circuit board, and demonstrated that Yubico’s tools treated it like any other YubiKey. This attack could be implemented in the supply chain, by swapping out authentic YubiKeys for these fakes, but supply chain interference is always a difficult task. A more feasible scenario, if the attacker has specific targets, would be to hand them out as a “public service” at an infosec event.

Seeking Hardware Transparency

These attacks certainly serve to instill some distrust of “secure hardware,” which raises the question: “What do we do when we don’t trust the circuitry and firmware?” One possible approach using open-source hardware was described by 0ctane in their DEF CON talk, “Untrustworthy Hardware and How To Fix It: Seeking Hardware Transparency”.

Often, the notion of “open-source hardware” sounds like a great idea, except for the not-so-tiny complication of actually producing the hardware from the specifications. Recent advances in consumer-grade CNC machines have started to make homemade printed circuit boards (PCBs) feasible, but it’s still time- and material-intensive to iterate.

Field-programmable gate arrays (FPGAs) are one option in this situation. An FPGA is a piece of hardware that can best be likened to a software-defined microchip: the user sends a definition of the circuitry they want, and the FPGA configures itself to implement that hardware definition. They are usually used for prototyping, debugging hardware, or performing complex digital signal processing. The curious reader may be interested in taking a deeper dive into what FPGAs actually are, but that’s beyond the scope of this discussion.
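For a flavor of what “configuring itself” means, FPGAs are built largely out of small lookup tables (LUTs) whose contents decide which logic function each cell computes. The toy model below is a simplified sketch in Python, not a description of any particular FPGA or toolchain; the class and function names are invented for illustration.

```python
from itertools import product


class LookupTable:
    """A toy n-input LUT: the stored truth table *is* the circuit."""

    def __init__(self, n_inputs, truth_table):
        if len(truth_table) != 2 ** n_inputs:
            raise ValueError("truth table must have 2**n_inputs entries")
        self.n_inputs = n_inputs
        self.truth_table = list(truth_table)

    def evaluate(self, *inputs):
        if len(inputs) != self.n_inputs:
            raise ValueError("wrong number of inputs")
        # Treat the inputs as a binary number, first input most significant.
        index = 0
        for bit in inputs:
            index = (index << 1) | int(bool(bit))
        return self.truth_table[index]


def configure(n_inputs, func):
    """'Program' a LUT by tabulating func over every input combination."""
    table = [
        int(bool(func(*bits))) for bits in product((0, 1), repeat=n_inputs)
    ]
    return LookupTable(n_inputs, table)
```

For example, `configure(2, lambda a, b: a ^ b)` yields a cell that behaves as an XOR gate, and reconfiguring the same structure with a different function yields a different gate. A real device wires many thousands of such cells together through programmable routing.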

0ctane proposes a cryptographic purpose: simulating a particular definition of a trusted CPU (in this case, OpenRISC), and running Linux and the desired cryptographic software on top of this simulated processor. The downside to using FPGAs is that the customizability comes at the cost of speed, when compared to silicon CPUs. Top-of-the-line FPGAs still run slower due to the need to buffer input and output between the cells, rather than the circuitry being optimally laid out, as is the case for a silicon chip.

For this application, however, that might be an entirely acceptable tradeoff: slower operation and computation in exchange for knowing that your code is running on a trusted stack, from the hardware up. That said, there are some unanswered questions as to how sound this approach is. It’s unclear to the author whether it is harder for an attacker to surreptitiously insert malicious logic into an FPGA than into a CPU, since this approach requires trusting the FPGA and its programmer software (running on an untrusted CPU).

There’s also an assumption made that OpenRISC is itself more secure than an Intel or AMD x86 chip. As we’ve seen in case after case, just being open-source doesn’t mean that software is more secure. It does provide visibility that you don’t otherwise have, but you either have to read all the software and convince yourself of its security properties, or you have to also place trust in the OpenRISC project to make a secure processor. 0ctane’s proposal is an interesting one, but it needs more data and discussion to be a viable approach; we look forward to hearing more from them in the future.

FPGAs aren’t the solution to all of our concerns about hardware security, and you have to place your trust somewhere, unless you can oversee the entire design and supply chain. This approach could potentially be valuable in the future when trusted hardware is essential, such as a standalone code-signing computer with the keys in a hardware security module, which is designed to break before revealing its private information. There are definitely issues with this solution as it stands, though. Hardware hackers are a tenacious bunch, as Mikhail on our research team demonstrated by hacking our office doors. In reality, there doesn’t seem to be much of a supply-chain threat if you’re buying your hardware from a trusted supplier. However, you might want to think twice before using a YubiKey that somebody hands you at a crypto meetup...