Investigating and recovering from a security incident is extremely stressful and time-consuming. Talking about what happened poses a different set of challenges, and many organizations struggle to communicate effectively.

Organizations are increasingly developing incident response playbooks that plan out in advance what steps to take in case of a security breach, such as an employee accessing files without authorization, a lost computer, or a server compromised by outside attackers. In many cases, these playbooks focus heavily on the technical details and operational tasks needed to recover and get back to business, and don't always go in depth on how to communicate what is happening. A recent paper in the journal Computers and Security, from academics at the United Kingdom's University of Kent and University of Warwick, laid out a framework for how organizations should communicate after a security incident.

Some organizations respond to security incidents better than others. Very few are able to talk about security incidents well, explaining what is happening, what they are doing, and what affected people can do. Whether the organization is perceived as doing a good job of handling the incident is often tied to its communications. In fact, handling the public discussion poorly can make the situation worse, the researchers said in the paper.

"The way that businesses communicate to their customers and external stakeholders following a data breach can impact their share price and reputation to such an extent that they can be considered cyber crises...these also have further implications for the business’ continuity and resilience," wrote Jason Nurse, an assistant professor of cybersecurity at the University of Kent, and Richard Knight, of the University of Warwick.

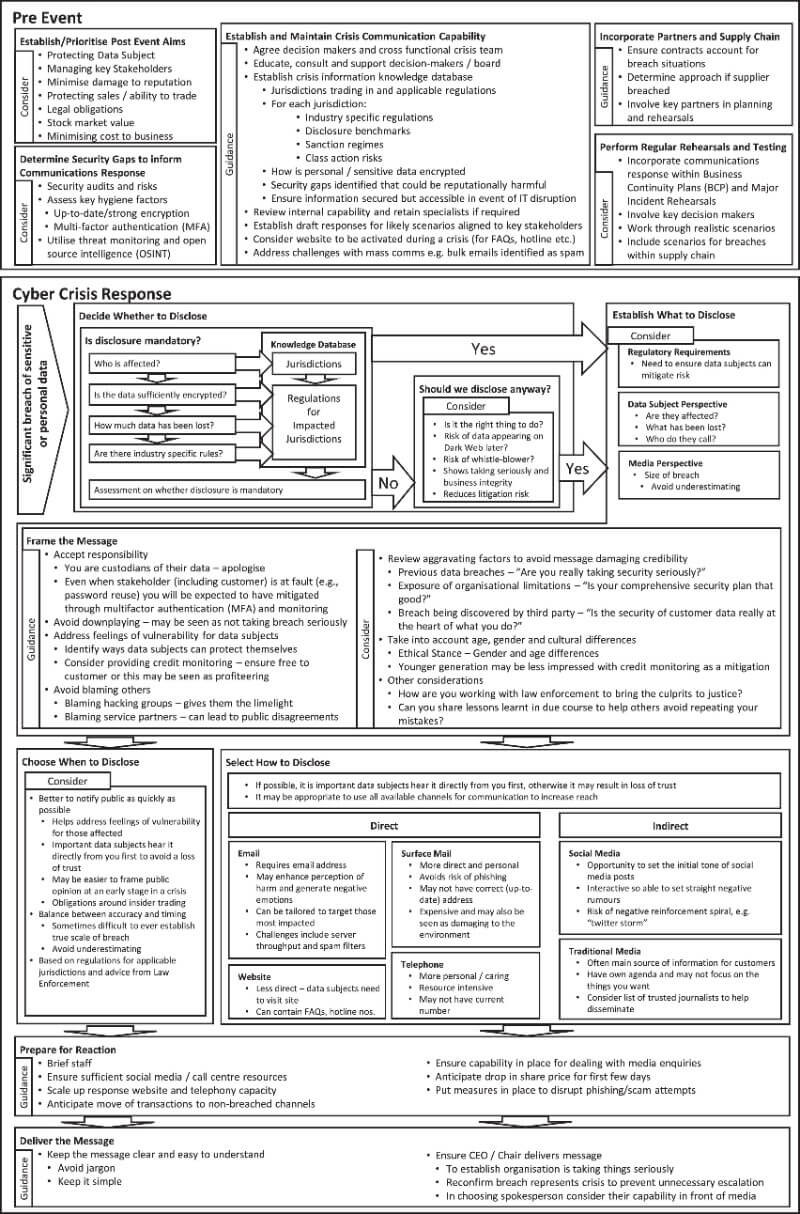

The full framework covers a range of questions to ask before and during a security incident. (Source: Computers and Security journal)

The massive data breach at Equifax in 2017 was bad enough in the number of individuals affected (143 million people), but the company's communications missteps did not inspire confidence in its ability to handle an incident of this magnitude. For example, the company created a brand-new website to provide information about the breach instead of posting it on its own site, leaving people unsure whether the new site was legitimate or not. The company's Twitter account also repeatedly directed users to the wrong domain for information about the breach, sending them to a fake domain that had been set up specifically to show why the new domain name was problematic.

Poor communication doesn't just affect the company's reputation or the perception of how well it is handling the crisis; it can also hit the share price. A drop in stock price could be "the 'knee jerk' reaction of ill-informed investors," the researchers wrote.


One of the challenging aspects of discussing a breach is that the organization has to walk a fine line between being the victim (the breach happened to it) and being responsible for the incident (a mistake made the breach possible). While the organization may be inclined to see itself as the victim, consumers for the most part do not.

"With incidents involving an unintentional exposure of data, typically the organisation (via its employees or stakeholders) is indisputably at fault and thus cannot reassign blame away from itself or act as a victim," Nurse and Knight wrote. Ticketmaster initially tried to shift blame for its data breach onto the third-party supplier of a faulty software component on its website. That strategy backfired: the supplier responded that Ticketmaster should never have used the software the way it had, and that had the supplier known, it would have advised against doing so.

"Blaming partners may incite public disagreements which may be considered an ill-advised crisis strategy," Nurse and Knight wrote.

While blaming others is tempting, it can be "detrimental to a business' attempt to protect its reputation," they wrote in the paper. Organizations that take responsibility and are seen to be proactively addressing the problem, such as through "the use of apologies and actions to mitigate the risk of harm to the data subject," are perceived more positively. The paper also noted that taking responsibility carries a risk, since it may open the door to lawsuits. However, regulators don't hesitate to impose "onerous sanctions" if the organization appears not to be meeting its obligations.

Organizations will be skewered for not disclosing promptly, and regulations such as the European Union's General Data Protection Regulation impose hefty penalties for failing to do so. But they will also be criticized if they are perceived as not knowing enough or holding information back. British Airways (BA) had to revise the number of people affected from 380,000 to 429,000, prompting concerns that the company had not taken the time to understand the situation or to provide accurate information.

"The risk of litigation is now outweighed by the positive effects of organisations taking responsibility for the crisis and the reduced risk of fines from relevant Data Protection Authorities," the paper said.

Nurse and Knight developed a framework to give organizations guidelines on what to think about when deciding how to communicate. The "comprehensive playbook" covers what organizations should consider "Before Cyber Crisis" (such as knowing which industry-specific regulations apply and the disclosure benchmarks, identifying decision makers, and putting security controls in place) and during the "Cyber Crisis Response," which covers the questions to ask during the actual incident. The "Before" section also asks organizations to decide on their post-breach goals before they are in crisis mode, since the communications strategy would differ if the intent is to protect sales and the stock price rather than to meet legal obligations.

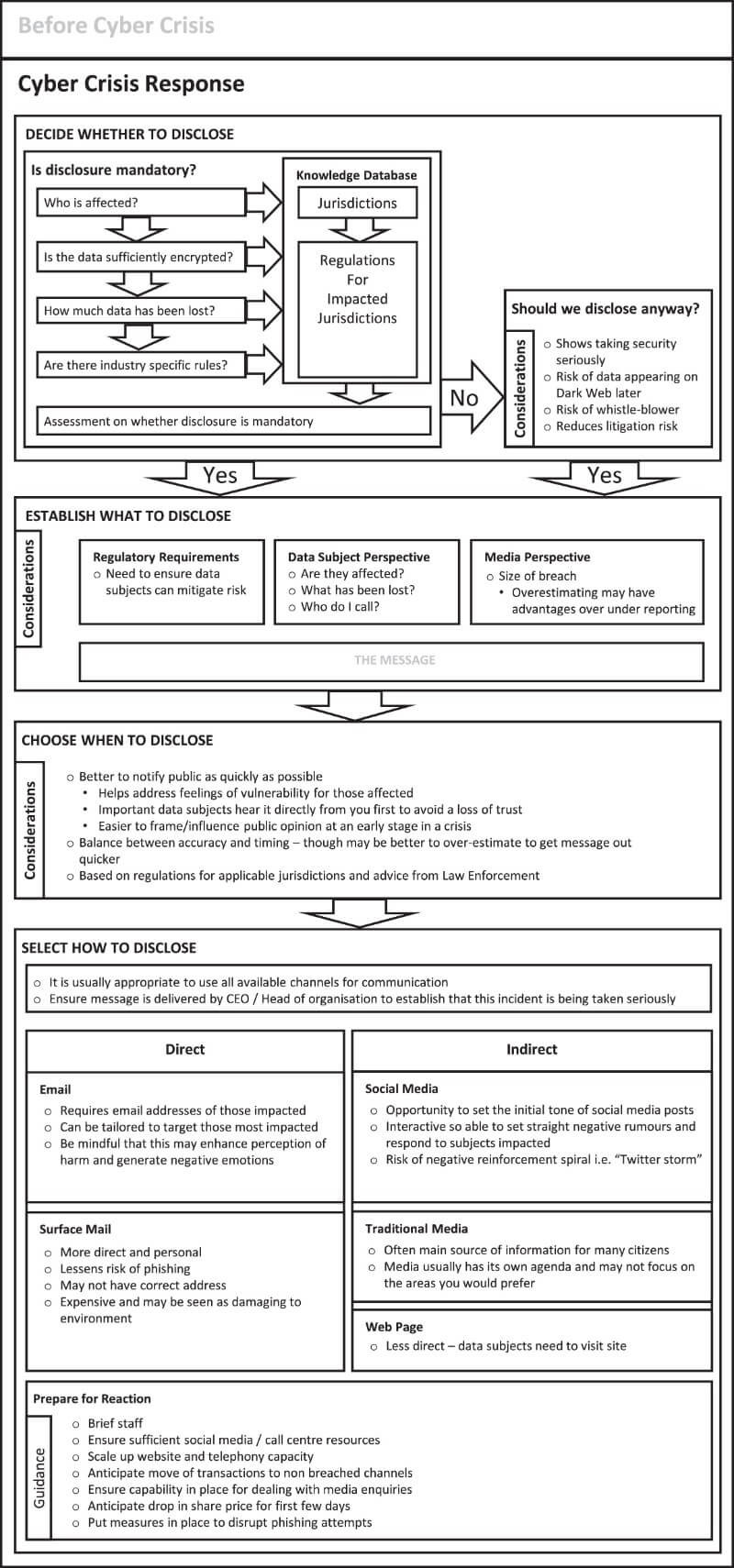

The "Response" section focuses on four questions: whether to disclose, what to disclose, when to disclose, and how to disclose. Each is broken down into further questions, and the flowchart walks through each step of the decision-making process. For example, when an organization decides whether to disclose, the first question is whether disclosure is mandatory. If it is, there is specific information to provide. If it is not mandatory, the next question asks, "Should we disclose anyway?" (The flowchart says, "Yes.")

The final step, "How to disclose," explores different methods and includes additional questions to consider when choosing among them. The framework could provide "a codified structure to facilitate the rollout of crisis communication capability," the paper said.

Incident response playbooks should contain information such as whom to call for outside help (for example, the ISP during a distributed denial-of-service attack), as well as technical details on what to do during the investigation. The playbook also needs to cover communications activities, such as setting up a mechanism for affected consumers to ask questions or get additional information, and how ongoing updates will be provided. Organizations should plan beforehand which departments and key personnel will be involved in crafting and disseminating public statements, and who will decide what information can be shared, and when.

Detailed questions from the framework for the "during" phase of the incident.