Ransomware has exploded in the past two years, as programs with names like Locky and WannaCry infect hosts in high-profile environments on a weekly basis. From power utilities to healthcare systems, ransomware indiscriminately encrypts all files on the victim’s computer and demands payment (usually in the form of cryptocurrency, like Bitcoin).

Tracking Desktop Ransomware Payments End to End

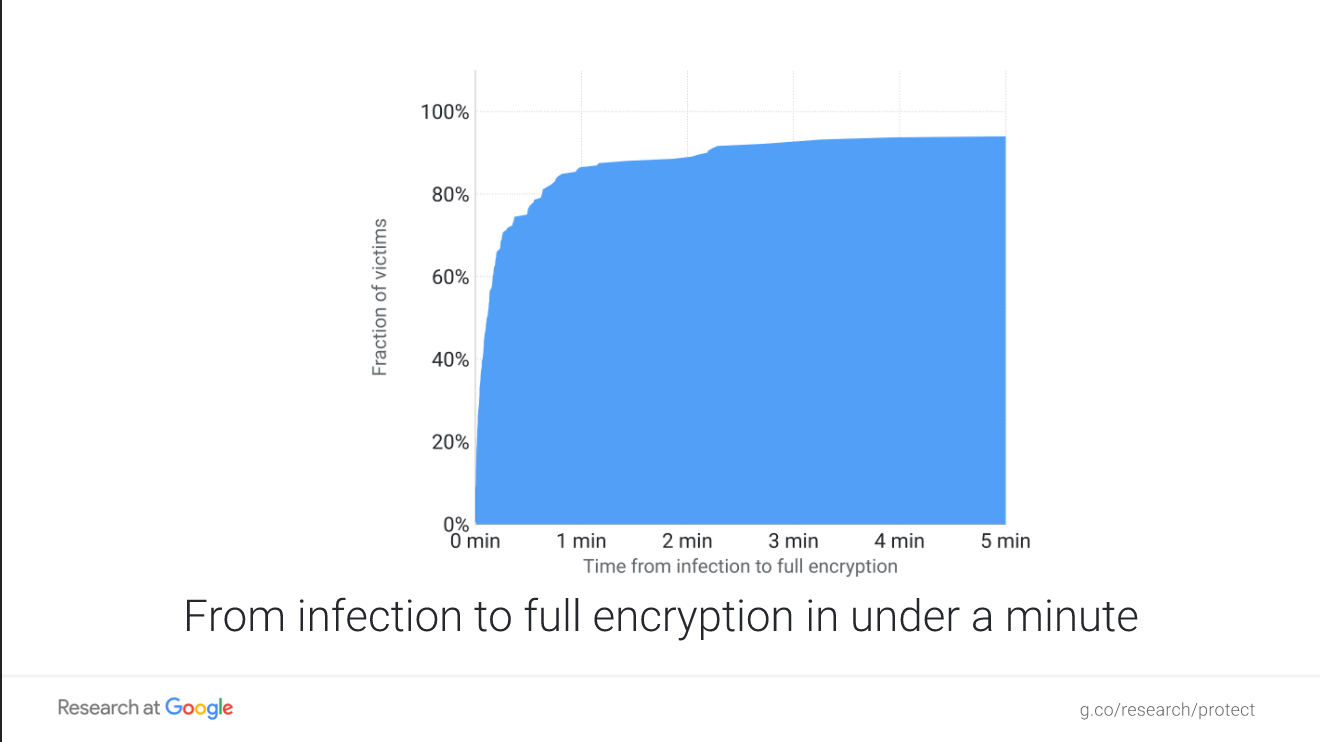

While the system is encrypting, the victim can potentially save their files by pulling the power cord, provided they realize in time that they ran shady software. However, recent work presented at Black Hat 2017 by Elie Bursztein, Kylie McRoberts, and Luca Invernizzi showed that the median ransomware victim has less than one minute to react before all of their files are encrypted:

Source: elie.net

Worms have always been focused on finding and infecting victims as soon as possible, ideally through systems that require no user interaction. The short time-to-compromise and time-to-spread of these types of malware mean that threat intelligence feeds have very little time to identify the indicators of compromise and distribute updates before a new variant could potentially infect scores of victims.

One approach that is often used in addition to intel feeds is real-time detection based on the behavior of a program. There have recently been advances in real-time detection presented at top security conferences, and they explore different strategies that may prove useful in other forms of malice-detection.

Tools to Fight Ransomware Attacks: ShieldFS

One potentially useful anti-ransomware tool that was presented at Black Hat 2017 was ShieldFS, created and presented by a group of researchers from Politecnico di Milano and Trend Micro. Subtitled, “The Last Word in Ransomware Resilient File Systems,” the insight in this project is applying machine learning (and the right type of machine learning) to operating-system-level file access patterns.

Implemented as a Windows filesystem filter, running in the kernel, ShieldFS isn’t a filesystem proper, but rather adds functionality to the underlying filesystem. Two common challenges in machine learning are feature engineering (how to come up with a list of descriptive “features” about the input) and feature selection (figuring out which of those features productively contribute to generating the correct answer).

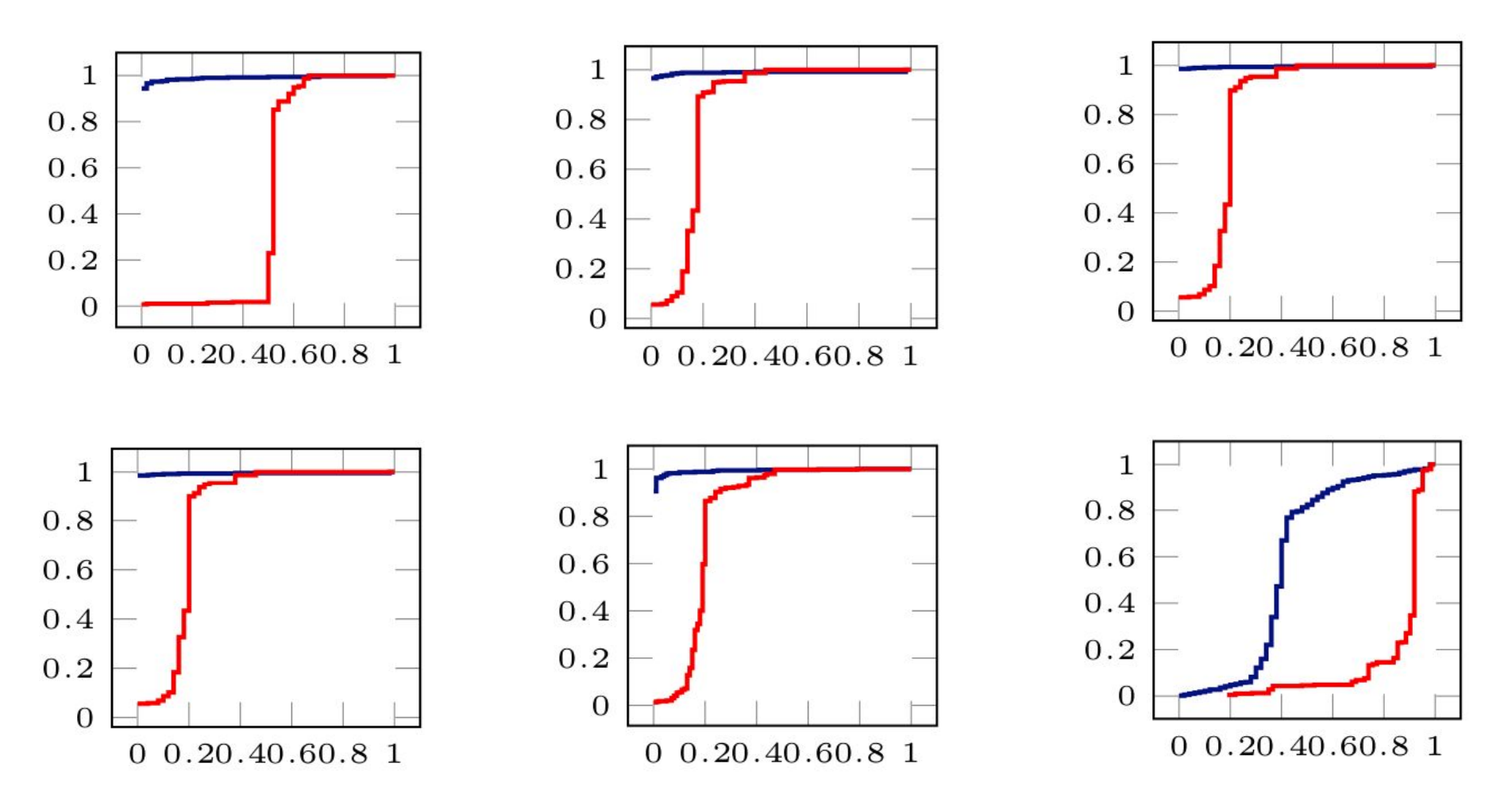

Feature engineering in ShieldFS seemed straightforward, since many of the features were simple counts of types of events the filter observed, such as directory listings and writes. They were also fortunate that so many of the features showed obvious qualitative differences between malicious (red) and benign (blue) programs, making feature selection also a high-confidence process:

Source: shieldfs.necst.it
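
To make the idea of count-based features concrete, here is a minimal sketch in Python. It is not ShieldFS code: the `FileEvent` record, the event kind names, and the `count_features` helper are all hypothetical, and the real filter observes kernel-level I/O requests rather than a Python list.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical event kinds, chosen for illustration only.
EVENT_KINDS = ("dir_listing", "read", "write", "rename")


@dataclass(frozen=True)
class FileEvent:
    pid: int    # process that issued the operation
    kind: str   # one of EVENT_KINDS
    path: str   # file or directory the operation touched


def count_features(events, pid):
    """Count each kind of filesystem event issued by one process."""
    own = [e for e in events if e.pid == pid]
    counts = Counter(e.kind for e in own)
    features = {kind: counts[kind] for kind in EVENT_KINDS}
    # Number of distinct files the process wrote to, regardless of how often.
    features["distinct_files_written"] = len(
        {e.path for e in own if e.kind == "write"}
    )
    return features
```

A classifier would then be trained on vectors like these, labeled as benign or malicious. The point of the sketch is only that the inputs are cheap to compute from events the filter already sees.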

This sets the researchers up for success. Using binary inspection (called “static analysis”), they were able to supplement results based on operation statistics (“dynamic analysis”). The team implemented a multitiered machine learning model to preserve long-term trends but also be able to react to new behavioral patterns.

By using a copy-on-write policy, the filter keeps the original content of each file a process modifies, so if a process starts to exhibit ransomware behavior, it can be killed and the original copies restored. This system detected ransomware with a 96.9% success rate, but even in the other 3.1% of cases the original content was still stored, so 100% of encrypted files could be restored.
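
The copy-on-write idea can be sketched with a small in-memory stand-in. This is an illustration under simplifying assumptions, not the ShieldFS implementation: the `ShadowStore` class and its method names are hypothetical, files live in a dictionary rather than on disk, and interleaved writes to the same file by several processes are not handled.

```python
class ShadowStore:
    """In-memory stand-in for a filesystem with per-process backups."""

    def __init__(self, files):
        self.files = dict(files)   # path -> current content (bytes)
        self._shadows = {}         # pid -> {path: original content or None}

    def write(self, pid, path, data):
        backups = self._shadows.setdefault(pid, {})
        if path not in backups:
            # First write by this process: remember the pre-write content.
            # None records that the file did not exist before.
            backups[path] = self.files.get(path)
        self.files[path] = data

    def rollback(self, pid):
        """Undo every write made by pid, e.g. after a detector flags it."""
        for path, original in self._shadows.pop(pid, {}).items():
            if original is None:
                self.files.pop(path, None)   # file was created by pid
            else:
                self.files[path] = original

    def commit(self, pid):
        """Discard backups once pid is judged benign."""
        self._shadows.pop(pid, None)
```

Because only the first write to each file triggers a backup, the restore target is always the state at the moment the process first touched the file, no matter how many times it rewrote the content afterwards.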

One general takeaway from this project is the value in starting with easy-to-derive features before getting fancy, as is often the temptation in machine learning. Additionally, the biggest downside of dynamic analysis (observing the process) is that it’s hard to undo operations once you decide it’s malicious.

By tweaking the space-efficiency algorithm of copy-on-write, they establish the ability to completely restore all files to the state they were in at the start of the process, mitigating this downside. They also avoid the performance and accuracy penalty of statically analyzing the binary while stalling execution, which is a common anti-virus/endpoint-protection technique.

3D Printing Security Concerns

In a very different context, additive manufacturing (i.e., “3D printing”), concerns about malware have arisen, given that the printers are IoT devices. We see security vulnerabilities with embedded hardware and IoT devices all the time, such as Duo Labs’ recent work attacking a “smart” drill.

At USENIX Security 2017 this past week, a team from Georgia Tech and Rutgers presented a solution to this problem, titled, “See No Evil, Hear No Evil, Feel No Evil, Print No Evil? Malicious Fill Patterns Detection in Additive Manufacturing”. We recently criticized a solution that relied on the security of an open hardware project for just pushing the question of trust down a level, onto the physical components that the firmware runs on.

The USENIX work set aside entirely this “turtles all the way down” approach to trust, using side-channels to observe the behavior of the 3D printer and compare it to a model of what it should be doing. They establish three layers of verification that detect whether either the printer or the controlling computer is compromised (or broken): acoustic output, visual progress, and embedding nanoparticles in the materials so that fraudulent prints would look significantly different when imaged via, for example, a CT scan.

By printing the example prosthesis under assumed-good conditions, they were able to train machine learning models about what information legitimate printing leaked via these side channels. Using three different models of printer, they were able to achieve 100% accuracy at detecting modified printer behavior in real-time.
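
The general shape of this approach, learning a baseline from known-good runs and flagging deviations as they happen, can be sketched as follows. This is a deliberately simple statistical stand-in, not the models from the paper: the `fit_baseline` and `first_deviation` helpers, the threshold `k`, and the assumption that traces are equal-length and time-aligned are all hypothetical.

```python
from statistics import mean, stdev


def fit_baseline(good_traces):
    """Per-time-step mean and standard deviation across known-good traces.

    Assumes at least two traces, all the same length and time-aligned.
    """
    steps = zip(*good_traces)
    return [(mean(s), stdev(s)) for s in steps]


def first_deviation(trace, baseline, k=3.0, min_sigma=1e-6):
    """Return the index of the first sample more than k sigma from the
    baseline mean, or None if the whole trace stays within bounds."""
    for i, (x, (mu, sigma)) in enumerate(zip(trace, baseline)):
        if abs(x - mu) > k * max(sigma, min_sigma):
            return i
    return None
```

Returning the index of the first out-of-bounds sample, rather than a single verdict at the end, reflects the real-time goal: a monitor can react at that step instead of waiting for the job to finish.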

At the co-located Workshop on Offensive Technologies (WOOT), a different team, from Ben-Gurion University of the Negev, University of South Alabama, and Singapore University of Technology and Design, presented precisely such an attack. This paper, “dr0wned – Cyber-Physical Attack with Additive Manufacturing”, presented a start-to-finish attack on the reliability of a quadcopter rotor.

The attack started with an exploit for a WinRAR vulnerability, delivered in a file disguised as a PDF. The vulnerability had previously been patched, but the team made the sadly reasonable assumption that the user doesn’t routinely update their computer. They also turned it into a worm, which scans for design files with the targeted geometry and comments out critical lines to sabotage the parts. Successful sabotage of this type requires that the part holds up to visual inspection and some amount of physical testing:

Source: usenix.net

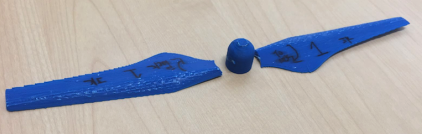

In the above photo, the top propeller is the intended device, while the bottom one has been sabotaged. Their approach to sabotage is two-fold: inserting cavities at structurally weak points, and changing the printer’s path so that, instead of being printed in a continuous path across the boundary between the blades and the cap, the cap and two blades are printed as three separate parts that are stuck together, which creates a significant structural weakness.

In both lab and real-world testing, the sabotaged propeller blades eventually flew off when they rotated fast enough.

The authors of this attack compared it to the previous state of the art in defense, which used acoustic detection methods with only a 77% success rate; they deem this “insufficient” for detection of this attack, which certainly seems like taking a rosy view. It would be fascinating to see these two research projects pitted against each other; the defenders are likely to have substantial success, because this attack relies on dramatically changing the path of the printer. The approach that used gold microrods in the material and imaged the result with a CT scan seems likely to detect substantial path changes, since this is precisely the attack the imaging was intended to detect.

In addition to the security properties tested by the detection work, it also provides a valuable quality analysis tool. By evaluating the printers as they print, these models can prevent the waste of valuable source material by halting the job as soon as the machine learning models indicate that the printer is malfunctioning.

While infosec defenders normally think of side-channels as something to loathe, since that’s how attackers can get information, in this case, the saying “the best defense is a good offense” is accurate. By acting as an “attacker,” the producer of the plans is able to gain information about what the printer is actually doing in order to provide confidence in the integrity of the results. Moreover, it accomplishes this without having to put trust in any of the production hardware.

These are just two examples of advances in real-time detection of malicious software. When attacks on hardware erode the trust we in the software world place in it, new challenges arise that can’t be solved simply by using signature-based detection. In addition to the advances these research projects contributed in the individual fields of detection of ransomware and malfunctioning additive manufacturing tools, respectively, they discuss techniques that can apply to other machine learning scenarios in security.

Observing the actual effects of program execution (whether it be 3D printer movements or filesystem actions) may prove to be more efficient than trying to analyze and reason about a program or data file.