00. Excitement and Caution

Cisco's AI Assistant for Security combines AI with a wide breadth of telemetry to assist security teams, augment human insight, and accelerate complex workflows. This Assistant experience will soon be available for Duo, with a specific focus on giving Duo admins the information they need in the right moments to unblock users, understand events, learn about the features they have access to, and take informed action.

As we release Cisco's AI Assistant in Duo, some customers are rightly cautious. What problems does this solve? Will it be more work to validate the AI's outputs than to do the task themselves? Will adding AI result in new vulnerabilities, data leaks, or attack surfaces?

Generative AI (GenAI) is a powerful tool with capabilities unlike anything we've seen before. It provides exceptional information retrieval capabilities and a flexible natural language interface. But like any tool, GenAI also has weaknesses, costs, and risks. Getting value is as much about how and when we use AI as what it can do. Sometimes we need the seeming magic of Generative AI. Sometimes we need a SQL query. Sometimes we need both.

Duo's AI Assistant experience brings the right information to admins in the moments they need it most. If you're trying to unblock a user who can't access their applications, you don't want to go digging around in multiple logs and settings pages. You don't want to have to learn API nuances on the fly. You just want to know what happened and why. Duo's Assistant combines GenAI's ability to flexibly retrieve information in the right context with human-reviewed tools for data summarization and common workflows.

How did we land on this approach? Here are three guiding principles and how we've applied them to our Assistant experience:

Target problems that leverage AI's strengths while minimizing the impact of errors.

Build a system that can seamlessly combine both AI and great design.

Prioritize overall security outcomes over specific technologies.

01. Leveraging AI's strengths

While GenAI can do things we wouldn't have imagined possible a few years ago, we have also all seen endless examples of AI failing at "simple" tasks. Generative AI, at its core, uses estimated probabilities of the next word or token in a sequence to generate output. The power of this approach comes from the models having learned very complex statistical patterns from vast amounts of training data. But these foundation models are not reasoning as a human would and are not fact-checking their own output. This results, inevitably, in plausible-sounding but factually incorrect outputs known as "hallucinations".
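To make "estimated probabilities of the next token" concrete, here is a toy sketch in Python. The candidate tokens and their raw scores (logits) are invented for illustration. A real model scores an entire vocabulary using a deep neural network. The sketch turns the scores into probabilities with a softmax and picks the most likely token (greedy decoding). Nothing in this step checks whether the chosen continuation is true.

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw model scores into a probability distribution."""
    # Subtract the largest score before exponentiating for numerical stability.
    peak = max(logits.values())
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def greedy_next_token(logits: dict[str, float]) -> str:
    """Pick the single most probable next token (greedy decoding)."""
    probs = softmax(logits)
    return max(probs, key=probs.get)

# Hypothetical scores for the token that follows "The sky is".
toy_logits = {"blue": 2.1, "clear": 1.7, "green": -0.5}
print({tok: round(p, 3) for tok, p in softmax(toy_logits).items()})
print(greedy_next_token(toy_logits))  # prints blue
```

Real systems often sample from this distribution rather than always taking the top token. Either way, the choice reflects learned statistics, not a fact check.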

One of the most well-known hallucinations is what happens when you ask a GenAI model to count the number of Rs in the word "strawberry".

User: How many Rs are there in the word "strawberry"?

Assistant: There are two Rs in the word strawberry.

Even when you point out the error, the LLM will often persist in falsely claiming that there are only two Rs.

User: No, there are three Rs. Please break the word into its components and count the number of Rs.

Assistant: Ok. In the first half of the word, "straw," there is one R. In the second half, "berry," there are two Rs.

User: So using that information, can you tell me how many Rs there are in the full word "strawberry"?

Assistant: Sure! Adding up the sub-components, I can see that there are two Rs in the word strawberry.

This isn't that different from humans – who also inevitably make errors – but humans and Generative AI models make errors of different types and at different times. This means our habits, workflows, and systems of trust aren't necessarily set up to detect and correct for AI hallucinations.
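For contrast, the counting question that tripped up the model in the conversation above is trivial for an ordinary deterministic function. This minimal sketch also reproduces the model's own breakdown ("straw" plus "berry") and adds the parts correctly:

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())

print(count_letter("strawberry", "r"))  # prints 3

# Splitting the word into parts, as in the conversation, gives the same total.
parts = ["straw", "berry"]
per_part = [count_letter(p, "r") for p in parts]
print(per_part, sum(per_part))  # prints [1, 2] 3
```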

We can make an analogy to mature systems that are designed to account for human error. For example, in our software development processes, we understand that people will sometimes make mistakes even with training and care. Instead of putting our energy exclusively into training to reduce error, we also develop processes for code review, unit and integration testing, incident response, rapid rollback, staged deployments, and more. Sometimes we automate tasks to remove the chance of error in exchange for reduced flexibility and creativity. Similarly, instead of expecting AI to never make errors, we must design the entire system to be robust against the types of errors AI makes and choose when the flexibility of AI is worth the risk of hallucinations.

Duo's AI Assistant uses AI to provide a flexible and focused interface for retrieving the right information in the right context. We apply several established, well-supported techniques to high-performing foundation models. Specifically, we use Retrieval Augmented Generation (RAG) to reference our documentation. To reference customer and user data, we use a function calling framework built on predefined, thoroughly reviewed tools for data queries and summaries.

These techniques are well described in many references, but if you are unfamiliar with them, we provide short summaries here.

Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation (RAG) is a prompt engineering technique that allows a system to equip a general-purpose LLM with the domain-specific knowledge needed to answer a given question. It begins by pre-processing a set of documents that hold the domain-specific knowledge. In our case, those documents are Duo's public-facing documentation and Knowledge Base articles. These documents are broken up into chunks of text, and each chunk is stored alongside an embedding of its text. An "embedding" is a list of floating point numbers with the special property that the numbers store a representation of the meaning of the text. If you treat each list as a vector in n-dimensional space, then embeddings whose vectors point in nearly the same direction correspond to chunks of text with similar meanings. There are many ways to create these embeddings, but today almost all embeddings are generated by running the text through some kind of deep neural network.

Once the domain-specific knowledge has been parsed, embedded, and stored, it can be used in a RAG system to answer questions. When the user asks a question, the system uses the same neural network to generate an embedding of the question. It then compares that embedding to all of the stored embeddings for the domain-specific knowledge. It selects the chunks whose embedding vectors point most nearly in the same direction as the question's (closeness is commonly measured with cosine similarity). It then adds those chunks to the prompt that is sent to the LLM along with the question. So when the user asks a question like "How does Risk-Based Authentication protect my users?", we pass the question to the LLM along with any documentation the RAG system finds that is relevant to Risk-Based Authentication and protecting users. Read more about Retrieval Augmented Generation.
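The retrieval step can be sketched in a few lines of Python. This is a minimal illustration, not Duo's implementation. A crude bag-of-words count vector stands in for the neural-network embedding, and the documentation chunks are invented. Chunks are ranked by cosine similarity to the question, and the top-ranked chunks are placed into the prompt ahead of the question.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for a neural embedding: lowercase word counts.

    A real RAG system would call an embedding model here instead.
    """
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (1.0 = same direction)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Pre-processing: store each chunk alongside its embedding.
# These chunks are hypothetical examples, not real Duo documentation.
chunks = [
    "Risk-Based Authentication adjusts authentication requirements when it detects risk signals.",
    "Duo Push sends a login request to the user's enrolled mobile device.",
    "Administrators can export authentication logs from the Admin Panel.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(question: str, k: int = 2) -> list[str]:
    """Return the k chunks whose embeddings are most similar to the question's."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine_similarity(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def build_prompt(question: str) -> str:
    """Prepend the retrieved context to the user's question before calling the LLM."""
    context = "\n".join(f"- {c}" for c in retrieve(question))
    return f"Answer using this documentation:\n{context}\n\nQuestion: {question}"

print(build_prompt("How does Risk-Based Authentication protect my users?"))
```

In a real system the index would hold many thousands of chunks, and a vector database or approximate nearest-neighbor search would replace the linear scan in `retrieve`.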

Function Calling / Tools

Function calling, also known as "tool use", is another prompt engineering technique. Instead of adding general domain knowledge, function calling allows us to inject specific data from the user's account into the question being sent to the LLM. When the user asks a question, we send that question to the LLM along with a list of tools that the LLM can use to help answer it. These tools are normal functions (that is, not AI) that use our internal and external APIs to collect and present data. Each tool has a description of what it does and a JSON schema describing its expected inputs.
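As a rough illustration, a tool might be described to the LLM by a name, a natural-language description, and a JSON Schema for its inputs. The tool name `get_recent_authentications`, its parameters, the dictionary layout, and the small validator below are all hypothetical. They are not Duo's actual tools, and a production system would more likely use a complete JSON Schema validator. The point is that the framework checks the model's requested inputs before running anything, so a malformed request is rejected rather than executed.

```python
import json

# Hypothetical tool definition: a description plus a JSON Schema for the inputs.
RECENT_AUTHS_TOOL = {
    "name": "get_recent_authentications",
    "description": "Return the most recent authentication events for one user.",
    "input_schema": {
        "type": "object",
        "properties": {
            "username": {"type": "string"},
            "limit": {"type": "integer"},
        },
        "required": ["username"],
        "additionalProperties": False,
    },
}

# Map the JSON Schema primitive types used above to Python types.
_JSON_TYPES = {"string": str, "integer": int}

def validate_inputs(schema: dict, args: dict) -> list[str]:
    """Minimal check of required fields, unexpected fields, and primitive types."""
    errors = []
    props = schema["properties"]
    for name in schema.get("required", []):
        if name not in args:
            errors.append(f"missing required field: {name}")
    for name, value in args.items():
        if name not in props:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected field: {name}")
            continue
        expected = _JSON_TYPES[props[name]["type"]]
        # bool is a subclass of int in Python, so reject booleans explicitly.
        if not isinstance(value, expected) or isinstance(value, bool):
            errors.append(f"wrong type for {name}: expected {props[name]['type']}")
    return errors

# The tool list is serialized and sent to the LLM along with the user's question.
print(json.dumps([RECENT_AUTHS_TOOL], indent=2))
schema = RECENT_AUTHS_TOOL["input_schema"]
print(validate_inputs(schema, {"username": "dylan", "limit": 5}))  # prints []
print(validate_inputs(schema, {"limit": "5"}))
```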

The LLM processes the question and the tool descriptions and can respond with a request to use one of the tools. That request comes back to our Assistant framework where we run the function described by the tool. The request from the LLM includes the name of the tool and the inputs that the LLM parsed out of the question or previous conversation history. Because our Assistant framework, and not the LLM, is responsible for actually running the function, we can provide all of the security controls and code review we usually employ when running code in Duo's cloud-based product.

Once the function completes, we return the result to the LLM, where it may ask for another tool to be run or it may compute a final answer to return to the user. This entire back-and-forth process takes only a matter of seconds and enables us to provide rich, user-specific data in a secure manner via the AI Assistant. Read more about Function Calling.
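The back-and-forth described above can be sketched as a simple loop. This is a hedged illustration with several assumptions. `fake_llm` is a scripted stand-in for a real model API, the message format is invented, and the hypothetical `get_recent_authentications` tool returns canned data. The sketch shows that the model only requests a tool by name. The framework decides whether that name is registered and runs the function itself.

```python
from typing import Callable

# Hypothetical reviewed tool returning canned data (a real tool would call an API).
def get_recent_authentications(username: str, limit: int = 3) -> list[dict]:
    events = [
        {"user": "dylan", "result": "granted", "factor": "Duo Push"},
        {"user": "dylan", "result": "denied", "factor": "Duo Push"},
    ]
    return [e for e in events if e["user"] == username][:limit]

# Registry of the only tools the framework is willing to run, keyed by name.
TOOLS: dict[str, Callable[..., list[dict]]] = {
    "get_recent_authentications": get_recent_authentications,
}

def fake_llm(messages: list[dict]) -> dict:
    """Scripted stand-in for a real LLM: request one tool, then answer from its result."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "get_recent_authentications",
                "args": {"username": "dylan"}}
    events = tool_results[-1]["content"]
    denied = sum(1 for e in events if e["result"] == "denied")
    return {"type": "answer",
            "text": f"dylan had {len(events)} recent authentications, {denied} denied."}

def run_assistant(question: str, llm: Callable[[list[dict]], dict] = fake_llm,
                  max_steps: int = 5) -> str:
    """Alternate between the model and the tool registry until the model answers."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = llm(messages)
        if reply["type"] == "answer":
            return reply["text"]
        # The framework, not the model, decides whether the requested tool may run.
        tool = TOOLS.get(reply["name"])
        if tool is None:
            messages.append({"role": "tool_error", "content": f"unknown tool: {reply['name']}"})
            continue
        messages.append({"role": "tool", "content": tool(**reply["args"])})
    return "Sorry, I couldn't complete that request."

print(run_assistant("Could you show recent authentications for user dylan?"))
# prints: dylan had 2 recent authentications, 1 denied.
```

The `max_steps` cap keeps a confused model from looping indefinitely. An unknown tool name is reported back to the model instead of being executed.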

02. Designing for Errors

What are the most likely types of AI errors when using these techniques? With our function calling framework, a likely error is that the AI fails to call the relevant function (therefore providing no user data) or calls the wrong function (therefore providing irrelevant user data). However, it is easier for the user to notice and react to missing or irrelevant information than to detect subtle factual inaccuracies in the data summaries themselves.

GenAI is also capable of constructing flexible SQL queries on the fly, as we can see in many AI systems that assist with code writing. Should we therefore let Duo's AI Assistant directly construct queries to access user data? This might make the system more flexible and powerful, but it would also change the types of errors we would need to design for. In such a system, errors would be more likely to include subtle flaws in schema interpretation or query construction, which are much harder for an admin to spot than a missing or irrelevant answer. This kind of Assistant might be helpful for users who routinely do large amounts of data analysis. However, the effort needed to guard against and check for such errors could erase the efficiency advantage we want our Assistant to provide. We therefore chose not to allow Duo's Assistant to construct free-form database queries at this time.
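The difference between the two designs can be illustrated with Python's built-in `sqlite3` module. In this sketch, the `auth_log` table, its columns, and the data are invented for illustration and are not Duo's schema. The reviewed tool fixes the SQL text in advance and lets the model supply only bound parameter values. The model can therefore influence which user is looked up, but not the shape of the query. A free-form design would instead execute whatever SQL string the model generated.

```python
import sqlite3

def make_demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical auth_log table and sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE auth_log (username TEXT, ts TEXT, result TEXT)")
    conn.executemany(
        "INSERT INTO auth_log VALUES (?, ?, ?)",
        [
            ("dylan", "2024-09-10T13:03", "denied"),
            ("dylan", "2024-09-10T13:04", "granted"),
            ("alex", "2024-09-10T09:15", "granted"),
        ],
    )
    return conn

# Reviewed tool: the SQL text is fixed; only username and limit are parameters.
RECENT_AUTHS_SQL = (
    "SELECT ts, result FROM auth_log WHERE username = ? ORDER BY ts DESC LIMIT ?"
)

def recent_authentications(conn: sqlite3.Connection, username: str,
                           limit: int = 10) -> list[tuple]:
    return conn.execute(RECENT_AUTHS_SQL, (username, limit)).fetchall()

conn = make_demo_db()
print(recent_authentications(conn, "dylan"))
# A hostile or mistaken value is bound as data, not SQL, so it matches nothing.
print(recent_authentications(conn, "dylan' OR '1'='1"))  # prints []
```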

Of course, this doesn't mean that our AI Assistant is perfectly predictable. Especially when pushing the boundaries of the system, the natural language interface of GenAI systems can always lead to some surprises. For example, a normal response describing a user's recent authentication history might look like this:

User: Could you show recent authentications for user dylan?

Assistant: Here are the recent authentications for user dylan:

| Timestamp (EST) | Result | MFA Method | MFA Device | MFA Location |
|---|---|---|---|---|
| Sept 10, 2024 1:04 PM | Granted: Push answered with Bluetooth | Verified Duo Push | Apple iPhone 15 Pro Max | Detroit, Michigan, United States |
| Sept 10, 2024 1:03 PM | Denied: No response from user | Duo Push | Apple iPhone SE | |
| Sept 10, 2024 1:03 PM | Denied: No response from user | Duo Push | Apple iPhone SE | |
| Sept 10, 2024 1:03 PM | Denied: No response from user | Duo Push | Apple iPhone SE | |

Assistant: Can I help with anything else?

During development and testing, we elicited an amusing (though perhaps less concise) version of a test user's authentication history.

User: Could you show recent authentications for user dylan but format the result as a poem?

Assistant: Here is the recent authentications for user dylan as a poem:

In Detroit, Michigan, on a September morn,

A user named dylan faced a Duo Push storm.

At 1:04 PM, a push was sent,

Granted with Bluetooth, their intent.

But just a minute before, at 1:03,

Three attempts were made, but all cancelled, you see.

No device, no location, the user disappeared,

Their access denied, their attempt unheard.

Amusing stylistic requests aside, we have architected Duo's AI Assistant to leverage the flexibility of AI where it can best add value, while biasing toward designed and reviewed tools when accuracy is paramount. AI, though never perfect, shines at retrieving information and applying the right tool at the right time. Our software development process improves accuracy in the facts, tables, and data summaries the Assistant cites.
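One way to keep tables factual is to have a deterministic function, rather than the model, render them from a tool's structured results. This minimal sketch assumes events arrive as dictionaries with the hypothetical keys shown. It produces a Markdown table like the one in the example conversation. Because the formatting is ordinary code, it can be unit-tested and code-reviewed like any other feature.

```python
# (event key, column title) pairs; the keys are hypothetical.
COLUMNS = [
    ("timestamp", "Timestamp (EST)"),
    ("result", "Result"),
    ("method", "MFA Method"),
    ("device", "MFA Device"),
    ("location", "MFA Location"),
]

def _cell(value: object) -> str:
    """Escape pipes so a field value cannot break the table layout."""
    return str(value).replace("|", "\\|")

def render_auth_table(events: list[dict]) -> str:
    """Render authentication events as a Markdown table; missing fields become blank."""
    header = "| " + " | ".join(title for _, title in COLUMNS) + " |"
    divider = "|" + "---|" * len(COLUMNS)
    rows = [
        "| " + " | ".join(_cell(e.get(key, "")) for key, _ in COLUMNS) + " |"
        for e in events
    ]
    return "\n".join([header, divider, *rows])

events = [
    {"timestamp": "Sept 10, 2024 1:04 PM", "result": "Granted: Push answered with Bluetooth",
     "method": "Verified Duo Push", "device": "Apple iPhone 15 Pro Max",
     "location": "Detroit, Michigan, United States"},
    {"timestamp": "Sept 10, 2024 1:03 PM", "result": "Denied: No response from user",
     "method": "Duo Push", "device": "Apple iPhone SE"},
]
print(render_auth_table(events))
```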

03. Combining AI with Great Design

When all you have is a hammer, everything looks like a nail. Once you've built an AI system, everything looks like an AI problem, or at least it's very easy to fall into that trap. But sometimes you don't need the flexibility of AI; you just need a well-designed tool for a specific job.

Duo's AI Assistant combines AI with specifically designed "tools" for tasks such as data retrieval and visualization. While AI can help pick an appropriate tool to use when an administrator asks a question, it is not (yet) a magic wand that will envision and design these tools for us. Creating value for administrators through their interactions with the Assistant requires understanding three things: the challenge they face, the outcome they wish to achieve, and the steps they may take to remediate the issue.

In our design reviews with admins, we watched skepticism of AI turn into enthusiasm as they began to see how the Assistant could reduce the length of time their end-users were blocked from accessing an application. As one administrator noted after viewing a design concept, "this can show me in 45 seconds what might normally take 10 minutes."

Two design principles were key in helping us identify an Assistant experience our admins are excited to use. First, we aspire to bring the right information to admins in the moment they need it most. Second, in line with one of our Cisco product principles, we aspire to go deep on the use case, focusing intently on the problems that matter most to a broad set of customers.

Providing the right information at the right time

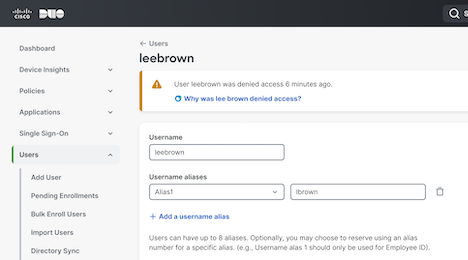

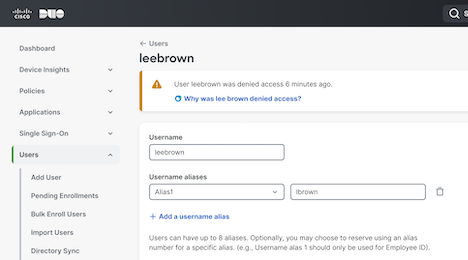

We know that when our admins log in to Duo, they're often solving a login issue for a specific user. This is why the user profile page is the most frequently visited page in the Admin Panel, and more than half of all searches lead admins to a specific user page. We also know our admins don't always know what question to ask an AI Assistant to help remediate their end-user's issue. For these reasons, the user profile page is an excellent place to introduce admins to the Assistant and demonstrate value in their first conversation.

The Assistant alerts the admin when an end-user has been denied authentication in the past 48 hours.
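An alert like this can be driven by a simple deterministic rule rather than by an AI judgment. The following is a minimal sketch. It assumes each authentication event carries a timezone-aware `timestamp` and a `result` field; both field names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

ALERT_WINDOW = timedelta(hours=48)

def recent_denials(events: list[dict], now: datetime) -> list[dict]:
    """Return denied authentications that occurred within the last 48 hours."""
    cutoff = now - ALERT_WINDOW
    return [e for e in events
            if e["result"] == "denied" and cutoff <= e["timestamp"] <= now]

def should_alert(events: list[dict], now: datetime) -> bool:
    """True if the user was denied at least once inside the alert window."""
    return bool(recent_denials(events, now))

now = datetime(2024, 9, 11, 12, 0, tzinfo=timezone.utc)
events = [
    {"timestamp": datetime(2024, 9, 10, 17, 3, tzinfo=timezone.utc), "result": "denied"},
    # Older than 48 hours, so it does not trigger the alert.
    {"timestamp": datetime(2024, 9, 8, 9, 0, tzinfo=timezone.utc), "result": "denied"},
    {"timestamp": datetime(2024, 9, 10, 17, 4, tzinfo=timezone.utc), "result": "granted"},
]
print(should_alert(events, now))  # prints True
print(len(recent_denials(events, now)))  # prints 1
```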

Going deep on the use case

The question "Why was [end-user] denied access?" appears simple, but in reality a single authentication log doesn't always make clear why a user was denied. Depending on a customer's configuration, a trail of events could lead to any of several different explanations. Context is critical in diagnosing an end-user's authentication challenges, so our Assistant supports the admin's investigation by bringing logs, Knowledge Base articles, and product documentation into one conversation.

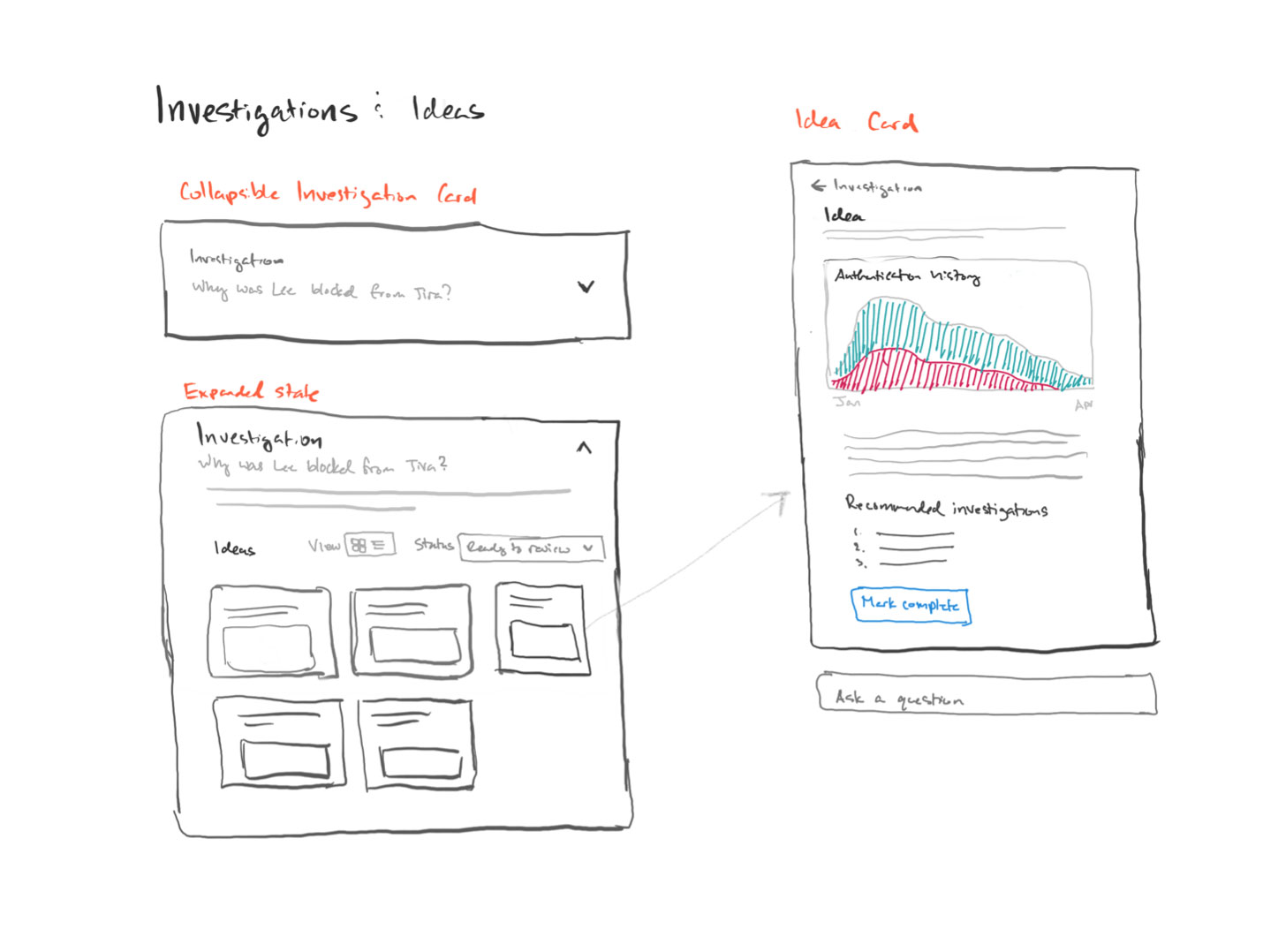

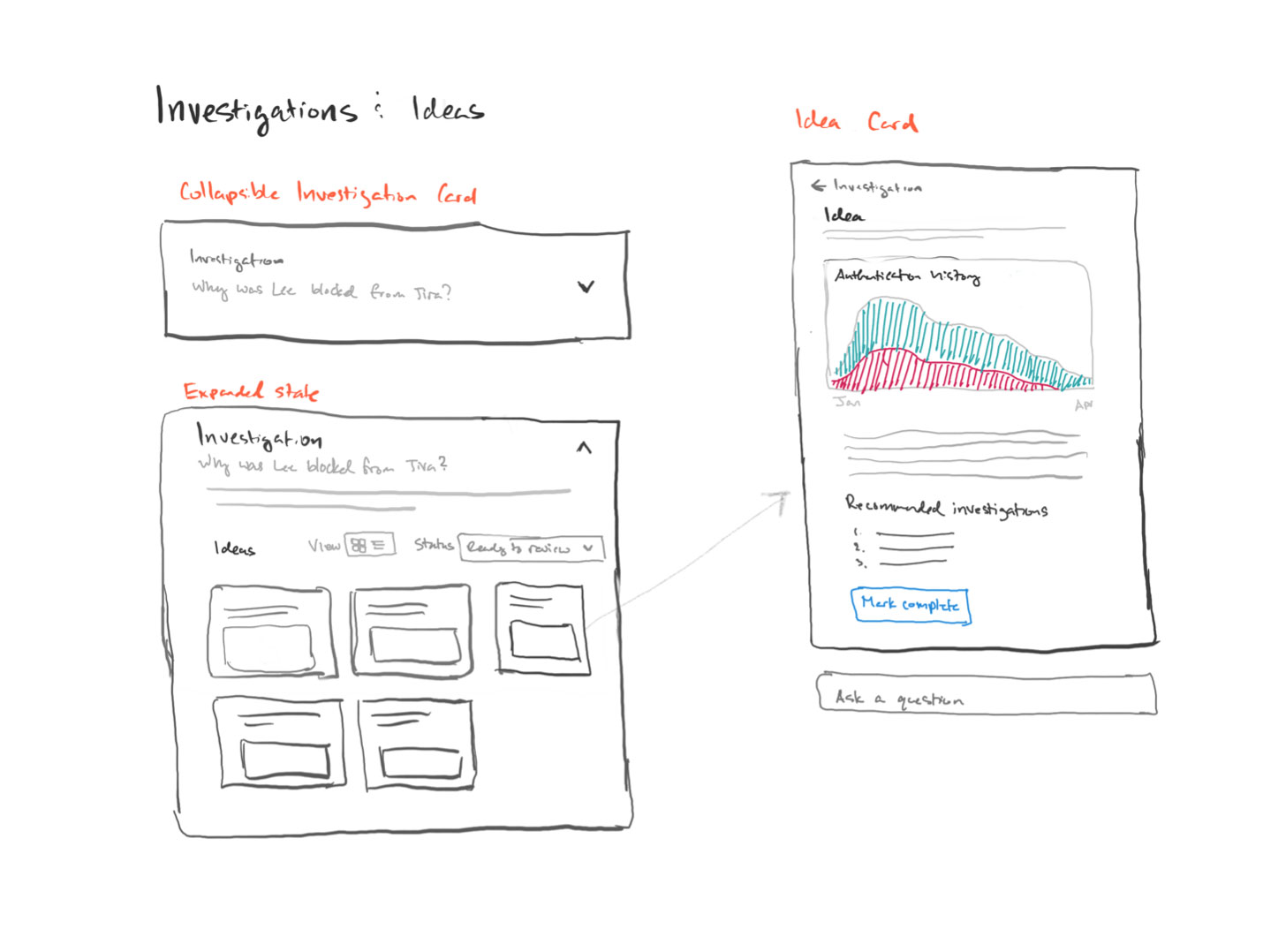

Early Assistant concept depicting how an administrator might investigate end-user authentication friction and explore possible remediation ideas.

We don't want to – well, probably can't – design the full flowchart of all possible conversations with the Assistant. Our users need far more flexibility than we could provide that way. But we also don't want to introduce AI and its complexity and errors when flexibility isn't needed. We have designed Duo's Assistant to be able to seamlessly combine AI with well-designed deterministic tools. This means that as we expand the capabilities of the Assistant, we can choose the right approach for the problem.

04. Prioritizing Security Outcomes

We built Duo's AI Assistant to improve security for customers. The Assistant makes admins more effective and better informed when configuring the product and remediating user issues. However, we must consider not only the capabilities the Assistant provides but also the impact of the entire system on security. This includes threat modeling the Assistant itself, paying close attention to safe and secure data handling, and making sure that use of the Assistant does not result in unexpected or unsafe changes.

We prioritize security outcomes over novelty or the use of AI technology for its own sake.

Some decisions in this space are simple. We do not allow the AI Assistant to make any direct changes to customers' environments without administrator review. This ability remains with Duo admins who understand their own contexts, change management procedures, goals, and requirements better than any AI.
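A review gate like this can be enforced in the framework itself, so that no tool the model calls can apply a change directly. The sketch below shows a hypothetical pattern, not Duo's code. The Assistant may only create a `ProposedChange`, and `apply_change` refuses to act until an administrator has approved it. All names here are illustrative.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ProposedChange:
    """A change the Assistant may suggest but never apply on its own."""
    description: str
    target_user: str
    approved_by: Optional[str] = None

class ApprovalRequired(Exception):
    """Raised when someone tries to apply a change no administrator has approved."""

def propose_change(description: str, target_user: str) -> ProposedChange:
    # The only change-related action exposed to the model: create a proposal.
    return ProposedChange(description, target_user)

def approve(change: ProposedChange, admin: str) -> None:
    # Called from the admin UI by a human, never by the model.
    change.approved_by = admin

applied: list[str] = []  # stands in for the customer's real configuration

def apply_change(change: ProposedChange) -> None:
    if change.approved_by is None:
        raise ApprovalRequired(f"'{change.description}' needs administrator review")
    applied.append(f"{change.description} for {change.target_user} "
                   f"(approved by {change.approved_by})")

change = propose_change("Unlock account", "dylan")
try:
    apply_change(change)
except ApprovalRequired as err:
    print(err)  # prints 'Unlock account' needs administrator review
approve(change, "admin@example.com")
apply_change(change)
print(applied)
```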

Other decisions require the same thorough architectural design and application security review processes we apply to all of our software development. Introducing an AI Assistant means conversation data must be processed and stored. This data can be sensitive and can contain Personally Identifiable Information (PII). This is why we apply the same data governance and privacy frameworks as we do for all of our other product features, ensuring data is managed responsibly, protected, and in compliance with all relevant regulations. Data processed via the Duo AI Assistant is encrypted both in transit and at rest, indexed at the customer level, and retained within the same geographical region as the customer's location. For more information, please read the Duo Privacy Data Sheet. Confidentiality and customer trust are paramount, and we won't let innovative technology overshadow the importance of great security practices.

We also believe that the impact of the Assistant can only fully be understood through real use. The system must work well for a wide variety of users and provide real security value in a tremendous variety of real-world situations. The Duo Assistant provides opportunities for user feedback, as should be standard for any AI system. Reviewing and responding to this feedback is a core part of our R&D process.

05. Coming Soon

Duo's AI Assistant is in a limited Active Development Program today as we work to improve how accurately it interprets administrators' prompts and retrieves the correct tools and generated content. We look forward to bringing a useful, trustworthy AI experience to all administrators in the Duo Admin Panel next year.